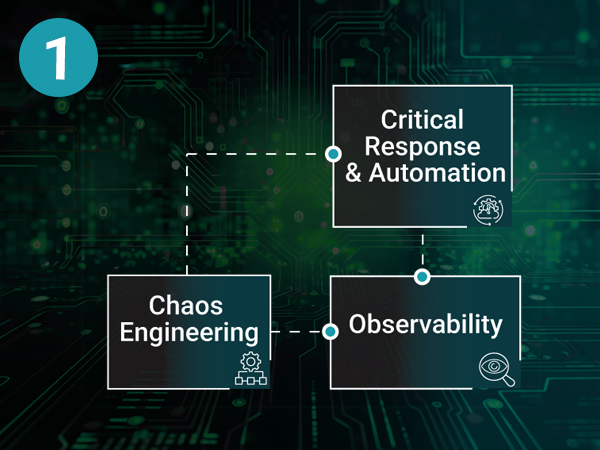

Engineering Resilience & Automation in Your Observability Stack

Modern observability stacks can generate thousands of alerts, but visibility without action doesn’t improve uptime. Engineering resilience means detecting issues early, prioritising what matters, automating response, and proactively testing failure scenarios before they become incidents.

Critical Response & Automation

Teams are often overwhelmed by high volumes of alerts, leaving them to manually investigate issues and coordinate fixes. The result is slow incident response, longer outages, and inconsistent remediation across teams.

The solution is critical response and automation that quickly pinpoints what’s failing, prioritises what matters, and automates repeatable actions so teams can resolve incidents faster, reduce noise, and restore services with confidence.

Observability

As systems grow more distributed and complex, teams struggle with limited visibility into how services behave and interact. This lack of insight makes it difficult to detect issues early or understand root cause when something breaks.

The solution is observability: real-time visibility across metrics, logs, and traces that helps teams quickly understand what’s happening in their systems, identify issues before users are impacted, and keep services healthy and reliable at scale.

Chaos Engineering

Modern systems are complex and failure is inevitable, yet many teams don’t know how their applications will behave under real-world stress. This uncertainty creates risk when outages or unexpected conditions occur.

The solution is chaos engineering, which intentionally introduces controlled failures to test system resilience, validate assumptions, and expose weaknesses before they impact customers, helping teams build confidence that their systems can withstand disruption.

Critical Response & Automation

Problems

Lots of alerts, slow resolution, and manual responses.

Solution

Understand what’s going wrong in your system fast, and fix it quickly.

Observability

Problems

No insights into complex systems.

Solution

Understand what’s going on in your system, detect issues, and keep everything healthy.

Chaos Engineering

Problems

Resilience uncertainty.

Solution

Break things on purpose to test resilience.

Together...

LogicMonitor’s LM Envision platform unifies hybrid observability with agentic AIOps to reduce noise, speed up resolution, and help prevent downtime across cloud and on‑prem environments.

PagerDuty turns those signals into coordinated incident response with on-call scheduling and incident management. It adds AIOps and automation to remove manual, repetitive work, running diagnostic or remediation actions and triggering runbook automation when seconds matter.

Gremlin completes the loop with controlled chaos experiments and reliability testing, helping teams find and fix availability risks before users are impacted.

Together, LogicMonitor, PagerDuty and Gremlin create a repeatable resilience operating model:

Observe > Prioritise > Respond > Automate > Learn.

Customers can standardise runbooks, significantly shorten mean time to resolution (MTTR), and continuously harden critical services while improving customer experience.

Industry Awards

LogicMonitor Recognized as a Leader in AI and Cloud in SiliconANGLE’s 2025 TechForward Awards

LogicMonitor Recognized by G2 for its comprehensive monitoring capabilities and ease of use

PagerDuty Recognised by G2 in its 2025 list of the Best IT Management Software Products

PagerDuty Recognised in CRN’s 20 Hottest AI Cloud Companies (The 2025 CRN AI 100)

DORA & NIS2

Downtime is not only expensive: large organisations must also comply with EU regulations such as NIS2 and DORA. We’re seeing this across nearly every industry undergoing digital-first transformation, including:

- Financial Services/FinTech
- Insurance
- Healthcare
- Public Sector
- Telecommunications
- Energy
- Retail/Ecommerce
- Technology/SaaS/ISVs
- Transportation/Logistics

Roles

Who cares about Engineering Resilience & Automation in the observability stack?

- Platform Engineering Manager
- Kubernetes Platform Owner
- SRE Lead
- Reliability Engineering Manager
- DevOps Lead
- IT Operations (ITOps) Manager
- NOC Lead
- Major Incident Manager
- Service Delivery Manager
- CTO/VP Engineering
- Head of Infrastructure

Key Discovery Questions

Answering these questions helps uncover risks and align your strategy with best practices in Engineering Resilience & Automation in the observability stack.

1. What does your current observability stack look like today (monitoring, logs, alerts), and what’s missing for your most critical services?
2. How much of your alert volume is actionable versus noise, and how do you currently deduplicate or prioritise incidents?
3. What is your end-to-end incident process (detection > triage > escalation > resolution > post-incident review), and where does it break down?
4. How do you execute remediation today (manual runbooks, scripts, or automated workflows), and how quickly can you take safe action during an incident?
5. Do you proactively test resilience (e.g., game days or chaos engineering) to validate how systems behave under failure before the next release?

Connect with our global team

As technology continues to reshape industries and deliver meaningful change in individuals’ lives, we are evolving our business and brand as a global IT services leader.